Projects kill teams and flow

Given the No Projects definition of a project as “a fixed amount of time and money assigned to deliver a large batch of value add“, it is not surprising that for many organisations a new project heralds the creation of a Project Team:

A project team is a temporary organisational unit responsible for the implementation and delivery of a project

When a new project is assigned a higher priority than business as usual and the Iron Triangle is in full effect, there can be intense pressure to deliver on time and on budget. As a result a Project Team appears to be an attractive option, as costs and progress can be monitored in isolation, and additional personnel can be diverted to the project when necessary. Unfortunately, in addition to managing the increased risk, variability, and overheads associated with a large batch of value-add, a Project Team is fatally compromised by its coupling to the project lifecycle.

The process of forming a team of complementary personnel that establish a shared culture and become highly productive is denied to Project Teams from start to finish. At the start of project implementation, the presence of a budget and a deadline means a Project Team is formed via:

- Cannibalisation – impairs productivity as entering team members incur a context switching overhead

- Recruitment – devalues cultural fit and required skills as hiring practices are compromised

Furthermore, at the end of project delivery the absence of a budget or a deadline means a Project Team is disbanded via:

- Cannibalisation – impairs productivity as exiting team members incur a context switching overhead

- Termination – devalues cultural fit and acquired skills as people are undervalued

This maximisation of resource efficiency clearly has a detrimental effect upon flow efficiency. Cannibalising a team member objectifies them as a fungible resource, and devalues their mastery of a particular domain. Project-driven recruitment of a team member ignores Johanna Rothman’s advice that “when you settle for second best, you often get third or fourth best” and “if a candidate’s cultural preferences do not match your organisation, that person will not fit“. Terminating a team member denigrates their accumulated domain knowledge and skills, and can significantly impact staff morale. Overall this strategy is predicated upon the notion that there will be no further business change, and as Allan Kelly warns that “the same people are unlikely to work together again“, it is an extremely dangerous assumption.

The inherent flaws in the Project Team model can be validated by an examination of any professional sports team that has enjoyed a period of sustained success. For example, when Sir Alex Ferguson was interviewed about his management style at Manchester United he described his initial desire to create a “continuity of supply to the first team… the players all grow up together, producing a bond“. This approach fostered a winning culture that valued long-term goals over short-term gains, and led to 20 years of unrivalled dominance. It is unlikely that Manchester United would have experienced the same amount of success had their focus been upon a particular season at the expense of others.

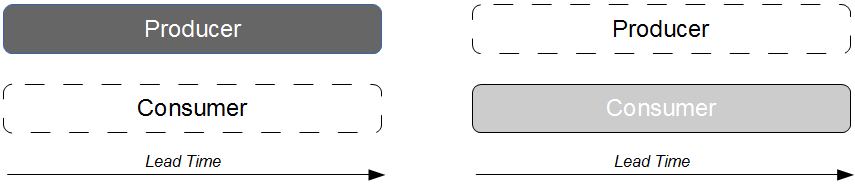

Therefore, the alternative to building a Project Team is to grow a Product Team:

A product team is a permanent organisational unit responsible for the continuous improvement of a product

Following Johanna’s advice to “keep teams of people together and flow the projects through cross-functional teams“, Product Teams are decoupled from project lifecycles and are empowered to pull in work as required. This enables a team to form a shared culture that reduces variability and improves stability, which as observed by Tobias Mayer “leads to enhanced focus and high performance“. Over a period of time a Product Team will master the relevant business and technical domains, which will fuel product innovation and produce a return on investment that rewards us for making the correct strategic decision of favouring products over projects.

![Economic Batch Size [Reinertsen]](https://www.stevesmith.tech/wp-content/uploads/2013/03/Economic-Batch-Size-Reinertsen-1024x852.jpg)