Pipeline updates must minimise risk to protect the Repeatable Reliable Process

We want to quickly deliver new features to users, and in *Continuous Delivery* Dave Farley and Jez Humble showed that “to achieve these goals – low cycle time and high quality – we need to make frequent, automated releases”. The pipeline constructed to deliver those releases should be no different: it should itself be released into Production frequently and automatically. However, this conflicts with the Continuous Delivery principle of Repeatable Reliable Process – a single application release mechanism for all environments, used thousands of times to minimise errors and build confidence – leading us to ask:

Is the Repeatable Reliable Process principle endangered if a new pipeline version is released?

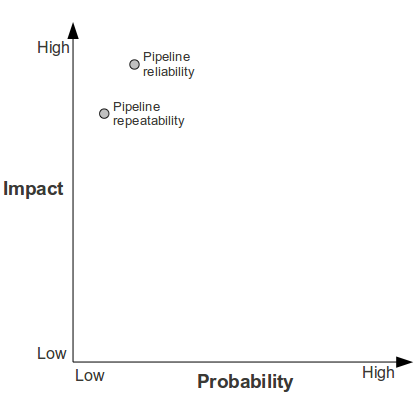

To answer this question, we can use a risk impact/probability graph to assess if an update will significantly increase the risk of a pipeline operation becoming less repeatable and/or reliable.

This leads to the following assessment:

- An update is unlikely to increase the impact of an operation failing to be repeatable and/or reliable, as the cost of failure is permanently high due to pipeline responsibilities

- An update is unlikely to increase the probability of an operation failing to be repeatable, unless the Published Interface at the pipeline entry point is modified. In that situation, the button push becomes more likely to fail, but not more costly

- An update is likely to increase the probability of an operation failing to be reliable. This is where stakeholders understandably become more risk averse, searching for a suitable release window and/or pinning a particular pipeline version to a specific artifact version throughout its value stream. These measures may reduce risk for a specific artifact, but do not reduce the probability of failure in the general case

Based on the above, we can now answer our original question as follows:

A pipeline update may endanger the Repeatable Reliable Process principle, and is more likely to impact reliability than repeatability

We can minimise the increased risk of a pipeline update by using the following techniques:

- Change inspection. If change sets can be shown to be benign with zero impact upon specific artifacts and/or environments, then a new pipeline version is less likely to increase risk aversion

- Artifact backwards compatibility. If the pipeline uses an Artifact Interface and knows nothing of artifact composition, then a new pipeline version is less likely to break application compatibility

- Configuration static analysis. If each defect has its root cause captured in a static analysis test, then a new pipeline version is less likely to cause a failure

- Increased release cadence. If the frequency of pipeline releases is increased, then a new pipeline version is more likely to possess shallow defects, shorter feedback loops, and cheaper rollback
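
As an illustration of the configuration static analysis technique above, here is a minimal Python sketch. It assumes pipeline configuration can be loaded as a dictionary of environment settings. The keys (`host`, `deploy_user`), the two example defects, and the function names are all hypothetical and not taken from any particular pipeline. Each check encodes the root cause of one past defect, so a new pipeline version can be analysed before it is released.

```python
from typing import Callable, Dict, List

# Hypothetical shape: environment name -> settings for that environment.
Config = Dict[str, Dict[str, str]]
Check = Callable[[Config], List[str]]


def check_deploy_user_present(config: Config) -> List[str]:
    """Hypothetical past defect: an environment was missing its deploy user."""
    return [
        f"{env}: missing 'deploy_user'"
        for env, settings in sorted(config.items())
        if not settings.get("deploy_user")
    ]


def check_hosts_unique(config: Config) -> List[str]:
    """Hypothetical past defect: two environments pointed at the same host."""
    seen: Dict[str, str] = {}
    errors: List[str] = []
    for env, settings in sorted(config.items()):
        host = settings.get("host")
        if host is None:
            continue
        if host in seen:
            errors.append(f"{env}: host '{host}' already used by {seen[host]}")
        else:
            seen[host] = env
    return errors


# One entry per captured root cause; a new defect adds a new check here.
CHECKS: List[Check] = [check_deploy_user_present, check_hosts_unique]


def analyse(config: Config) -> List[str]:
    """Run every check and return all error messages; empty means no known defect."""
    return [error for check in CHECKS for error in check(config)]
```

A pipeline build could call `analyse` on its candidate configuration and refuse to publish a new version if the returned list is not empty.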

Finally, it is important to note that a frequently-changing pipeline version may be a symptom of over-centralisation. A pipeline should not possess responsibility without authority and should devolve environment configuration, application configuration, etc. to separate, independently versioned entities.
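
One way to picture this devolution is a release manifest in which each entity carries its own version. The following Python sketch is an assumption for illustration only: the `ReleaseManifest` type, its field names, and the version strings are hypothetical. It shows that a change to environment configuration can be released without producing a new pipeline version.

```python
from dataclasses import dataclass, fields, replace
from typing import List


@dataclass(frozen=True)
class ReleaseManifest:
    """Hypothetical record of the independently versioned entities in one release."""
    pipeline_version: str
    environment_config_version: str
    application_config_version: str
    artifact_version: str


def changed_entities(previous: ReleaseManifest, current: ReleaseManifest) -> List[str]:
    """Return the names of the entities whose versions differ between two releases."""
    return [
        f.name
        for f in fields(ReleaseManifest)
        if getattr(previous, f.name) != getattr(current, f.name)
    ]


# Illustrative values only: an environment change leaves the pipeline version untouched.
previous = ReleaseManifest("1.4.0", "env-12", "app-7", "2.0.1")
current = replace(previous, environment_config_version="env-13")
```

Here `changed_entities(previous, current)` reports only `environment_config_version`, so the pipeline itself is not implicated in the change.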